To deepen wonder and advance understanding of our natural world—past and present—in order to embrace responsibility for our collective future.

Carnegie Museum of Natural History’s core exhibition featuring real dinosaur fossils.

The ‘Dinosaurs in Their Time’ exhibit is valuable both for academic research and for public life.

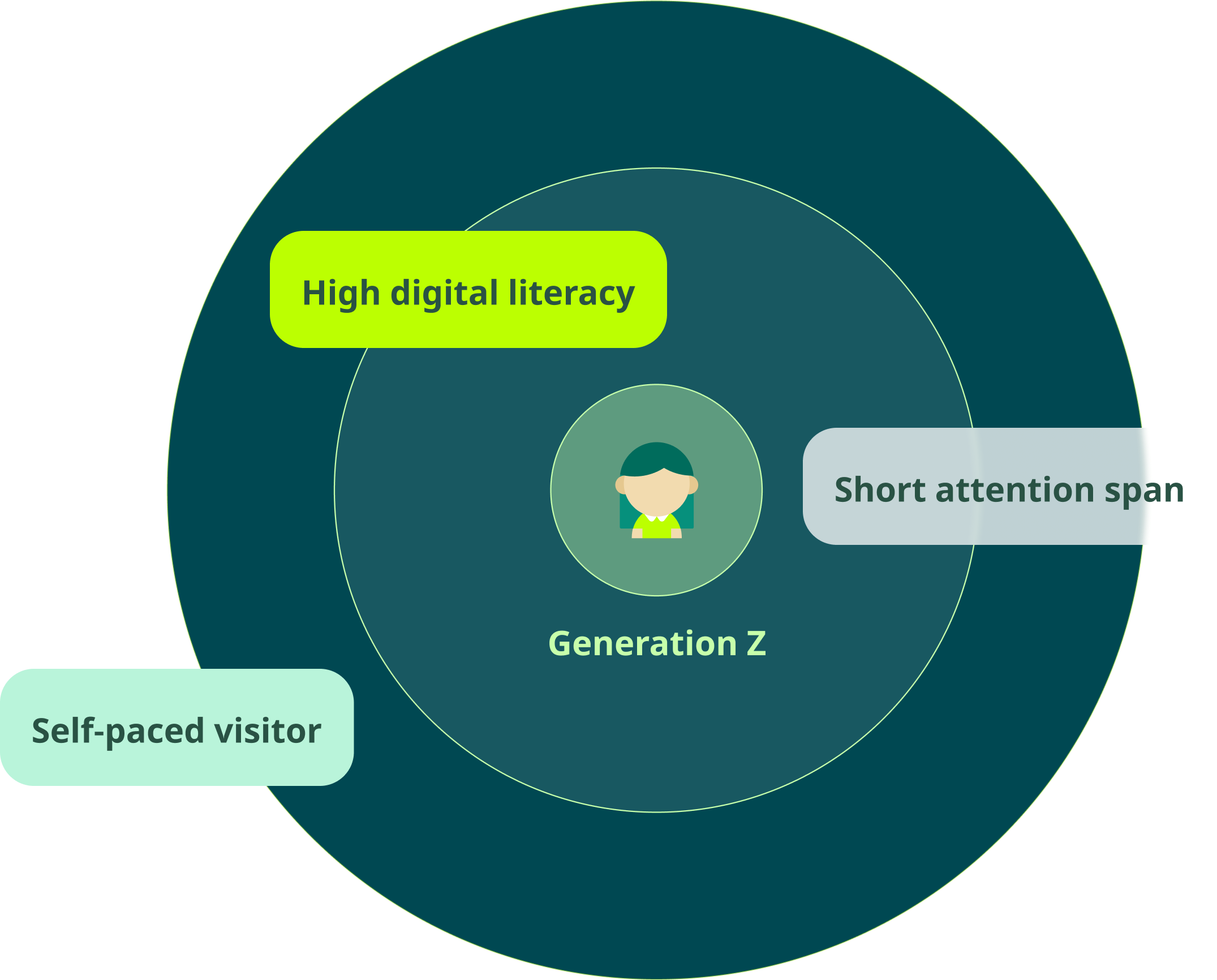

Our target audience is Generation Z visitors, who are characterised by shorter attention spans and high digital literacy.

They may find it harder to stay focused on traditional, less interactive museum exhibits such as ‘Dinosaurs in Their Time’, which can reduce how much of the presented information they retain.

By incorporating XR interactions and augmented reality, we aim to spark curiosity and enhance learning through immersive storytelling.

Our goal is to convert passing interest into sustained engagement, ultimately reimagining CMNH as an interactive, participatory museum.

To address our research question, we followed these four design principles:

Watch this film to experience the multi-sensory, extended-reality journey with the dinosaurs for yourself.

We transform distant historical facts into engaging, relatable narratives that resonate with the modern-day experiences of the guests.

The key moments of our user journey follow this path:

The personalities of the dinosaurs are presented, and visitors are encouraged to choose the one they resonate with most emotionally.

By mimicking the selected dinosaur’s posture, the visitors finish the transformation.

Visitors pick up the mixed-reality headset and the collector, and learn how to use the collector.

Approaching a painting and lingering in front of it for more than 2.5 seconds activates an information display in the head-up display (HUD).
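The dwell rule above can be modelled as a per-frame timer. The following is a minimal Python sketch, not the Unity prototype's code: it assumes the headset reports once per frame whether the visitor is near a painting, and the class `DwellTrigger`, its `update` method, and the reset-on-leave behaviour are hypothetical. Only the 2.5-second threshold comes from the design.

```python
class DwellTrigger:
    """Hypothetical model of the painting dwell trigger.

    Shows the info display once a visitor has stayed near a painting for
    strictly longer than the threshold; leaving resets the timer.
    """

    def __init__(self, threshold_s: float = 2.5) -> None:
        self.threshold_s = threshold_s  # 2.5 s dwell threshold from the design
        self.elapsed_s = 0.0            # continuous time spent near the painting
        self.active = False             # whether the HUD info display is shown

    def update(self, is_near: bool, dt: float) -> bool:
        """Advance one frame of length dt seconds; return True while the display is shown."""
        if is_near:
            self.elapsed_s += dt
            if self.elapsed_s > self.threshold_s:
                self.active = True
        else:
            # Assumption: walking away hides the display and restarts the count.
            self.elapsed_s = 0.0
            self.active = False
        return self.active


trigger = DwellTrigger()
frames = [trigger.update(True, 0.5) for _ in range(6)]
print(frames)  # the display turns on only on the sixth 0.5 s frame (3.0 s > 2.5 s)
```

Resetting the timer when the visitor moves away means that briefly walking past a painting does not pop up information, which keeps the HUD quiet during ordinary navigation.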

Before entering a new period, visitors are stopped temporarily by a virtual boundary and told how the current period ended and what its dinosaurs evolved into.

Guided hand gestures let visitors appreciate the authentic dinosaur fossils together with spatially placed information and stories.

In front of each dinosaur fossil is an incubator; placing the collector in it triggers the interaction.

Visitors collect interesting dinosaur features by grabbing them and placing them onto the collector. By the end, each visitor has a personalized path collector that records their journey.
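One way to think about the path collector is as an ordered record of (fossil, feature) pairs that only accepts features while it sits in a fossil's incubator. The Python sketch below is a hypothetical data model, assuming that docking in an incubator is what enables collecting and that each feature is recorded once; `PathCollector`, `place_in_incubator`, `collect`, and `journey` are illustrative names, and the fossil and feature labels in the usage lines are made up.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class PathCollector:
    """Hypothetical model of a visitor's path collector.

    Features are stored in the order they were collected, so the finished
    collector reflects the visitor's own route through the exhibit.
    """

    visitor_id: str
    docked_at: Optional[str] = None  # fossil whose incubator currently holds the collector
    features: List[Tuple[str, str]] = field(default_factory=list)

    def place_in_incubator(self, fossil: str) -> None:
        """Placing the collector in a fossil's incubator enables collecting at that fossil."""
        self.docked_at = fossil

    def collect(self, feature: str) -> bool:
        """Record a grabbed feature; return False if not docked or already collected."""
        if self.docked_at is None:
            return False
        entry = (self.docked_at, feature)
        if entry in self.features:
            return False
        self.features.append(entry)
        return True

    def journey(self) -> List[str]:
        """Summarise the personalised path as 'fossil: feature' strings in collection order."""
        return [f"{fossil}: {feature}" for fossil, feature in self.features]


collector = PathCollector("visitor-1")
collector.place_in_incubator("Diplodocus")   # illustrative label
collector.collect("long neck")               # illustrative feature
print(collector.journey())
```

Keeping the collection order, rather than a set, is what makes the collector a record of the visitor's path rather than just a checklist.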

The talk provides visitors with a more insightful and meaningful experience.

The narration vividly conveys the exhibit's core theme, the path of evolution, and is designed to inspire visitors with the message "Life will find a way."

When exiting the exhibit, visitors scan their egg tickets to receive a digital version of their collected skeletons. This digital memento serves as a lasting reminder of their journey, allowing them to revisit and reflect on the experience.

We used a laser cutter and a 3D printer to create the physical path collector and incubator.

We also used Unity and Meta Quest 3 to build working MR prototypes for testing and iteration.

Chronological Route

Scattered Information

Neglected Spatial Narratives

Information Panel

Navigation Bar

Conversational UI

Intuitive

Natural

Short learning curves

Info panels at the paintings and period transitions respond to eye gaze.

The info panels below are placed alongside the fossils. Interactions with fossils at a distance use hover, pinch, and grab gestures.
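The hover, pinch, and grab interactions with distant fossils can be summarised as a small decision function. This is a hypothetical Python sketch, not the prototype's logic: it assumes a hand ray reports whether it hits a fossil and how far away the fossil is, that pinching while hovering opens the fossil's info panel, and that grabbing while hovering starts a distance grab. The enum names, `resolve_interaction`, and the 3 m `max_reach_m` cut-off are all illustrative assumptions.

```python
from enum import Enum


class Gesture(Enum):
    NONE = "none"
    PINCH = "pinch"
    GRAB = "grab"


class Interaction(Enum):
    IDLE = "idle"
    HOVER = "hover"                  # ray rests on the fossil: highlight it
    SELECT = "select"                # pinch while hovering: open the info panel (assumed)
    DISTANCE_GRAB = "distance_grab"  # grab while hovering: pull a feature toward the hand (assumed)


def resolve_interaction(ray_hits_fossil: bool, distance_m: float,
                        gesture: Gesture, max_reach_m: float = 3.0) -> Interaction:
    """Map the hand ray and current gesture to an interaction state.

    max_reach_m is a hypothetical limit beyond which fossils ignore input.
    """
    if not ray_hits_fossil or distance_m > max_reach_m:
        return Interaction.IDLE
    if gesture is Gesture.PINCH:
        return Interaction.SELECT
    if gesture is Gesture.GRAB:
        return Interaction.DISTANCE_GRAB
    return Interaction.HOVER


print(resolve_interaction(True, 1.5, Gesture.NONE))
```

Making hover the default whenever the ray is on target gives visitors feedback before they commit to a gesture, which supports the short learning curve the design aims for.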

The items in the primary navigation bar are designed as 'eggs' to match the 'life' theme and to invite intuitive, natural responses and feedback. The secondary panel opens only when an egg is selected by patting it. To choose a sub-category in the secondary panel, users pinch it.
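The two-level egg navigation behaves like a small state machine: patting an egg opens its secondary panel, and pinching only works inside an open panel. The Python sketch below models that rule under stated assumptions; `EggNavigation`, `pat`, `pinch`, and the example menu labels are hypothetical, and patting a different egg is assumed to replace the open panel and clear the previous selection.

```python
from typing import Dict, List, Optional


class EggNavigation:
    """Hypothetical model of the egg-shaped primary navigation bar."""

    def __init__(self, menu: Dict[str, List[str]]) -> None:
        self.menu = menu                     # egg name -> its sub-categories
        self.open_egg: Optional[str] = None  # egg whose secondary panel is showing
        self.selected: Optional[str] = None  # currently chosen sub-category

    def pat(self, egg: str) -> None:
        """Patting an egg is the only way to open its secondary panel."""
        if egg in self.menu:
            self.open_egg = egg
            self.selected = None  # assumption: switching eggs clears the old choice

    def pinch(self, sub_category: str) -> bool:
        """Pinching selects a sub-category, but only within the open secondary panel."""
        if self.open_egg is None or sub_category not in self.menu[self.open_egg]:
            return False
        self.selected = sub_category
        return True


# Illustrative menu; the real categories are not specified here.
nav = EggNavigation({"Jurassic": ["Fossils", "Environment"],
                     "Cretaceous": ["Fossils", "Extinction"]})
nav.pat("Jurassic")
nav.pinch("Environment")
print(nav.open_egg, nav.selected)
```

Gating the pinch on an opened egg mirrors the design's intent that the secondary panel stays hidden until the visitor deliberately pats an egg.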

Users can talk directly with the iconic dinosaurs.

To enhance engagement and learning, the interface has to blend seamlessly into the field of view (FOV) without distracting from the fossil display.

How should we design the position and angle of UI elements in AR space?

We used a paper prototype for the initial spatial demo and a digital prototype to test the spatial experience.

How can we assess the feasibility and utility of multi-sensory interactions?

Goals of the low-fi prototype:

- Test the scale and position of the Egg UI in space

- Test the usability of the “pat” and “distance grab” gestures

- Test the usability of skeleton interactions and the overall user flow